Quick Guide to A/B Testing Your Live Chat Setup

There are many ways to integrate live chat on your website, and many decisions to make about the look and behaviour of your chat system. How do you know whether you have the right setup? Through A/B testing.

The other day, a colleague who is not so familiar with digital marketing asked me to explain, in an easy way, what exactly A/B testing is and how it could be used to improve live chat performance. I thought about my five-year-old brother and how I would make him understand the process of A/B testing.

“Ok, so imagine you have two cars. The red and the blue one over there (I used pens during the explanation due to the lack of cars on my desk). Next week you have a very big race, for which you should take your fastest car. How would you pick one? You would probably set up your racing track, test the red car, measure its performance and then, under the same conditions, try the blue one. In the end, you would choose the one that finished the track in the shortest time, right?”

That's A/B testing in a nutshell. It's a method to compare marketing campaigns, landing pages or design elements - it can be anything - and know which one is more likely to bring you the best results. When you do A/B testing on your website you guide a group of visitors to one page and another group to a similar page with a small change. This change can be a different call-to-action button, a video, or another image, but you can also use A/B testing to measure the impact of live chat and its different setups. Let’s see how.

Important Points to Keep in Mind

- Test only one change at a time - If you compare setups in which many points are different (e.g. the button position, colour, and chat behaviour), it is impossible to judge which change or changes resulted in the better score. So instead, take the exact same setup and change one element at a time, e.g. the button colour.

- Only draw conclusions from significant results - When page A shows a conversion rate of 10% and page B one of 15%, it doesn't mean you should go for page B if each page had no more than 10 visitors in your test. VWO offers an A/B test significance calculator sheet that might be of use to you. When your website doesn't have many visitors, you will have to let your test run for a longer time to achieve significance.

- Make sure all elements function properly across browsers - When we had just started out with Userlike, we wanted to test the effect of a video on a landing page. So we sent 50% of that page's visitors to the version with the video and 50% to the version without it. However, after a few days we realised that the video wasn't working properly on Internet Explorer and older versions of Firefox, which distorted our results and left us without a conclusion.

- Use common sense - Even when you get significant results, you shouldn't jump to conclusions without using common sense. To use the previous example: it's probably better to have no video on your landing page than a bad amateurish video. That doesn't mean a video is a bad idea by itself.
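To make the significance point above concrete, here is a minimal sketch of a two-proportion z-test in Python. It is not the VWO calculator, only one common way to check whether a difference in conversion rates could be down to chance. The function name and the visitor numbers are made up for illustration, and the normal approximation it relies on is rough for very small samples.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a, visitors_a, conv_b, visitors_b):
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conv_a / visitors_a
    rate_b = conv_b / visitors_b
    # Pooled rate under the assumption that both pages convert equally well.
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Illustrative numbers only: 10% vs 15% with 20 visitors per page ...
small_test = two_proportion_p_value(2, 20, 3, 20)
# ... and the same rates with 2000 visitors per page.
large_test = two_proportion_p_value(200, 2000, 300, 2000)

print(f"20 visitors each:   p = {small_test:.3f}")   # well above 0.05
print(f"2000 visitors each: p = {large_test:.6f}")   # well below 0.05
```

The same 10% versus 15% gap is inconclusive with a handful of visitors and convincing with a few thousand, which is why a low-traffic site needs a longer test.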

The A/B Testing Process for Live Chat

1. Live Chat or No Live Chat

The most basic A/B test for live chat is the choice between Situation A: Live Chat, and Situation B: No Live Chat. If our experience is any guide, live chat should be the winning option for business goals such as an increase in customer satisfaction, conversion, lead collection, etc.

For this first A/B test you should start with the setup that you think will work best. For example, when you are A/B testing your checkout page, which has an average visit time of 40 seconds, set a proactive chat to open after 50 seconds to help people who seem to be experiencing an issue. Do what you think works best, then start deviating from there one step at a time with different live chat setups.
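If you split the traffic yourself rather than through a testing tool, one possible approach is to hash a visitor identifier so that each visitor always lands in the same situation. The sketch below is an assumption for illustration: the function name, the experiment label and the visitor IDs are hypothetical and not part of Userlike.

```python
import hashlib


def assign_variant(visitor_id, experiment="live-chat-vs-none"):
    """Return "A" (live chat) or "B" (no live chat), stable across visits."""
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Read the first 8 bytes as an integer: even goes to A, odd goes to B.
    bucket = int.from_bytes(digest[:8], "big") % 2
    return "A" if bucket == 0 else "B"


# Hypothetical visitor IDs, e.g. taken from a first-party cookie.
for visitor in ["visitor-1001", "visitor-1002", "visitor-1003"]:
    print(visitor, "->", assign_variant(visitor))
```

Because the assignment depends only on the ID and the experiment label, a returning visitor keeps seeing the same version, and a new experiment label reshuffles the groups.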

2. Different Live Chat Setups

After implementing live chat you can start running A/B experiments with different live chat setups and measure which one produces the better results. It's important that you run your tests and compare results on the same page, so that the results are not affected by external factors you are not measuring.

There are many different elements which you can A/B test. Once again, make sure the two setups you compare differ in only one element. Some of our suggestions include trying out different colours of the live chat button, different positions for the button or different wording of the live chat button message. These are part of the layout elements, which also include the layout of the button, the welcome messages, the font used, the operator picture and so on.

Button Position: The Button Position is a setting that allows you to choose where your live chat button appears. From our own tests we can say that the bottom right corner results in the most user interactions. However, with A/B testing you can find out what the best location for your widget is.

Button Colour: The colour determines to a large extent the visibility of your chat service. Different colours trigger different responses, so it is important to test the reaction of your website visitors to the various options.

Button Wording: The wording of the button refers to the word or sentence appearing on the live chat button. It can be something actionable such as “Talk to us!” or more inviting like “Do you have questions?”.

Chat Starter: Differences in live chat behaviour can be tested as well. There are three main options for how a chat starts: the normal approach, where the chat starts after the web visitor clicks on the live chat button; the proactive chat, which is triggered automatically and invites the visitor to chat; and the register approach, which requires the visitor to fill in a registration form before the chat starts. Finally, you can also use our API to further customise advanced chat behaviour.

Support Groups: You can also test the use of different support groups to respond to the customer. Some options include multi-language widgets (depending on customer location), department-related widgets (separate chats for Marketing, Sales, IT, Support) or function-related widgets (Information about Shipping, Information about Product and Information about Checkout are just some examples).

Offline Behaviour: Offline behaviour determines what happens when none of your support agents is online and available for a chat. There are three different setups you can choose from. The general “Feedback Form” collects the visitor’s name, email and a message, and gives users the option to upload a screenshot. The “Notification” is a simple message you can leave for customers when you are not available, something like “We are not around to chat at the moment”. Finally, you can also disable the live chat button so that no customer sees it unless there is an agent to talk to.
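To keep the "one change at a time" rule manageable across all of these elements, it can help to write down a baseline setup and derive each test variant from it. The following is only a sketch: the setting names and values are hypothetical labels for the options described above, not actual Userlike configuration keys.

```python
# Hypothetical baseline setup; keys and values are illustrative labels only.
BASELINE = {
    "button_position": "bottom-right",
    "button_colour": "green",
    "button_wording": "Talk to us!",
    "chat_starter": "normal",
    "offline_behaviour": "feedback_form",
}

# Alternative values you might want to try for each setting.
ALTERNATIVES = {
    "button_position": ["bottom-left"],
    "button_colour": ["orange"],
    "button_wording": ["Do you have questions?"],
    "chat_starter": ["proactive", "register"],
    "offline_behaviour": ["notification", "hide_button"],
}


def single_change_variants(baseline, alternatives):
    """Yield (changed_setting, variant) pairs differing from baseline in one setting."""
    for setting, values in alternatives.items():
        for value in values:
            variant = dict(baseline)  # copy, so the baseline stays untouched
            variant[setting] = value
            yield setting, variant


for changed, variant in single_change_variants(BASELINE, ALTERNATIVES):
    print(f"Test '{changed}': {BASELINE[changed]!r} vs {variant[changed]!r}")
```

Each generated variant is then tested against the baseline on its own, so any difference in results can be traced back to a single setting.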

Comparing Results

Setting comprehensive result metrics is as important as defining the elements to be tested. Therefore, understanding what can be measured and what that information tells you is the second step in implementing your A/B live chat test.

According to our own analysis, some of the most useful metrics for comparison are:

- Number of chats received

- Conversion rate

- Number of leads collected

- Service ratings by users

It is then time to combine the best-performing settings into a single setup. This tends to be the most successful solution, but it's important to compare its results to the setups you tested before and make sure this is really the case.
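As a rough illustration of putting these metrics side by side, the sketch below summarises two setups in the same way. The class, the field names and all numbers are invented for the example; in practice the figures would come from your tracking tool.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class VariantResults:
    name: str
    visitors: int
    chats: int
    conversions: int
    leads: int
    ratings: list = field(default_factory=list)  # service ratings, e.g. 1 to 5


def summarise(results):
    """Turn raw counts into the comparison metrics listed above."""
    return {
        "variant": results.name,
        "chats": results.chats,
        "chat_rate": results.chats / results.visitors,
        "conversion_rate": results.conversions / results.visitors,
        "leads": results.leads,
        "avg_rating": mean(results.ratings) if results.ratings else None,
    }


# Made-up numbers for two widget setups tested on the same page.
setup_a = VariantResults("A", visitors=1000, chats=50, conversions=100, leads=20, ratings=[4, 5, 3])
setup_b = VariantResults("B", visitors=1000, chats=80, conversions=120, leads=35, ratings=[5, 4, 4])

for summary in (summarise(setup_a), summarise(setup_b)):
    print(summary)
```

Expressing chats and conversions as rates per visitor keeps the comparison fair when the two setups did not receive exactly the same amount of traffic.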

Setting up A/B Testing with Userlike live chat

Integrate Userlike live chat with tracking: To add a tracking tool to your live chat solution, simply go to your widget editor, click on the “Behaviour” tab and then select “Tracking”. Choose your desired tracking tool from the list and follow the setup tutorial.

Create two widgets with different settings:

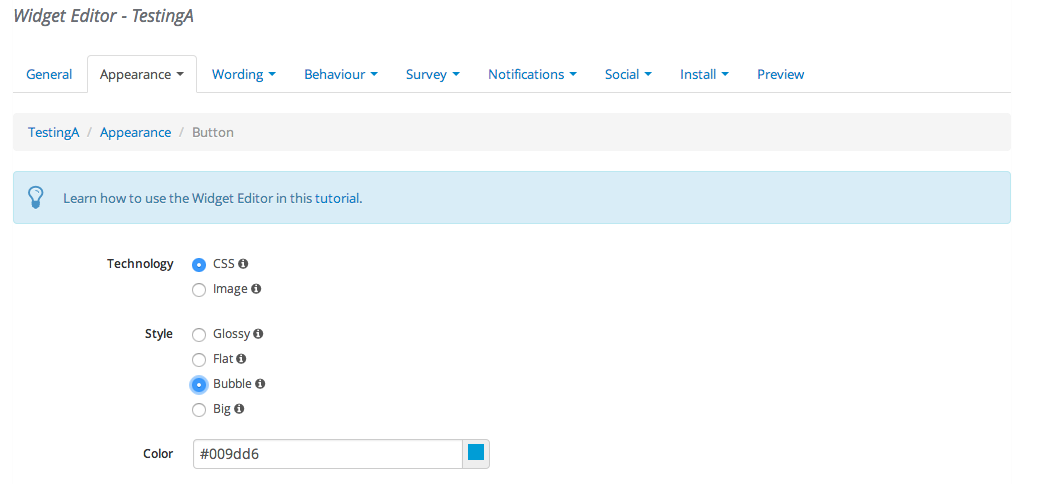

- Layout: In this example we are only testing the style of the live chat button

- Wording: In this example we will test different wording for the widget button

- Behaviour: Now, let’s test different widget behaviours

- Surveys: It is now time to check results from different surveys. One widget will have an initial survey, the other won’t.

- Social Buttons: Finally, if you want to check the increase of social media followers coming from live chat you can implement the following set up.

Tools for A/B testing:

- Google Content Experiments: A free tool which allows you to test up to 10 different variations at once. It is particularly recommended if you are testing two pages at once, as it compares the behaviour on both simultaneously.

- Optimizely: This tool allows you to add custom goal tracking related to a wide range of possible actions which can be taken by your customers. Optimizely can be set up and optimised by both coding and non-coding employees.

- Visual Website Optimizer: A well-designed solution for bringing A/B testing to your website. It makes it easy to add comparisons and analyse results.

If you have any questions while implementing your live chat A/B testing, just let us know through our live chat or by e-mail at support@userlike.com. We are glad to help.